TL;DR

Today we’re releasing a major upgrade to Bloom (& the open-source codebase, tutor-gpt).

We gave our tutor even more autonomy to reason about the psychology of the user, and—using GPT-4 to dynamically rewrite its own system prompts—we’re able to dramatically expand the scope of what Bloom can do and massively reduce our prompting architecture.

We leaned into theory of mind experiments, and Bloom is now more than just a literacy tutor: it’s an expansive learning companion.

Satisfying Objective Discovery

Bloom is already excellent at helping you draft and understand language. But we want it to do whatever you need.

To expand functionality though, we faced a difficult technical problem: figuring out what the learner wants to do.

Sounds simple (just ask), yet any teacher will tell you, students are often the last to understand what they ought to be doing. Are you learning for its own sake, or working on an assignment? What are the expectations and parameters? What preferences do you have about how this gets done?

Explaining all this to a tutor (synthetic or biological) upfront is laborious and tiresome. We could just add some buttons, but that’s a deterministic cop-out.

What expert educators will do is gather more information throughout the completion of the task, converging on a more precise objective along the way, keeping the flow natural, and leaving the door open to compelling tangents and pivots.

The key here is that they don’t have all the information (they don’t know precisely what the objective is), but being good at tutoring means turning that into an advantage: figuring it out along the way is optimal. The effective human tutor dynamically iterates on a set of internal models about student psychology and session objectives. So how do we recreate this in Bloom?

Well, we know that (1) foundation models are shockingly good at theory of mind, (2) Bloom already excels at pedagogical reasoning, and (3) autonomous agents are having early success, so what if we stopped trying to deterministically prescribe an indeterminate intelligence?

What if we treated Bloom with some intellectual respect?

Autonomous Prompting

The solution here is scary simple. The results are scary good.

Here’s a description of the previous version’s architecture:

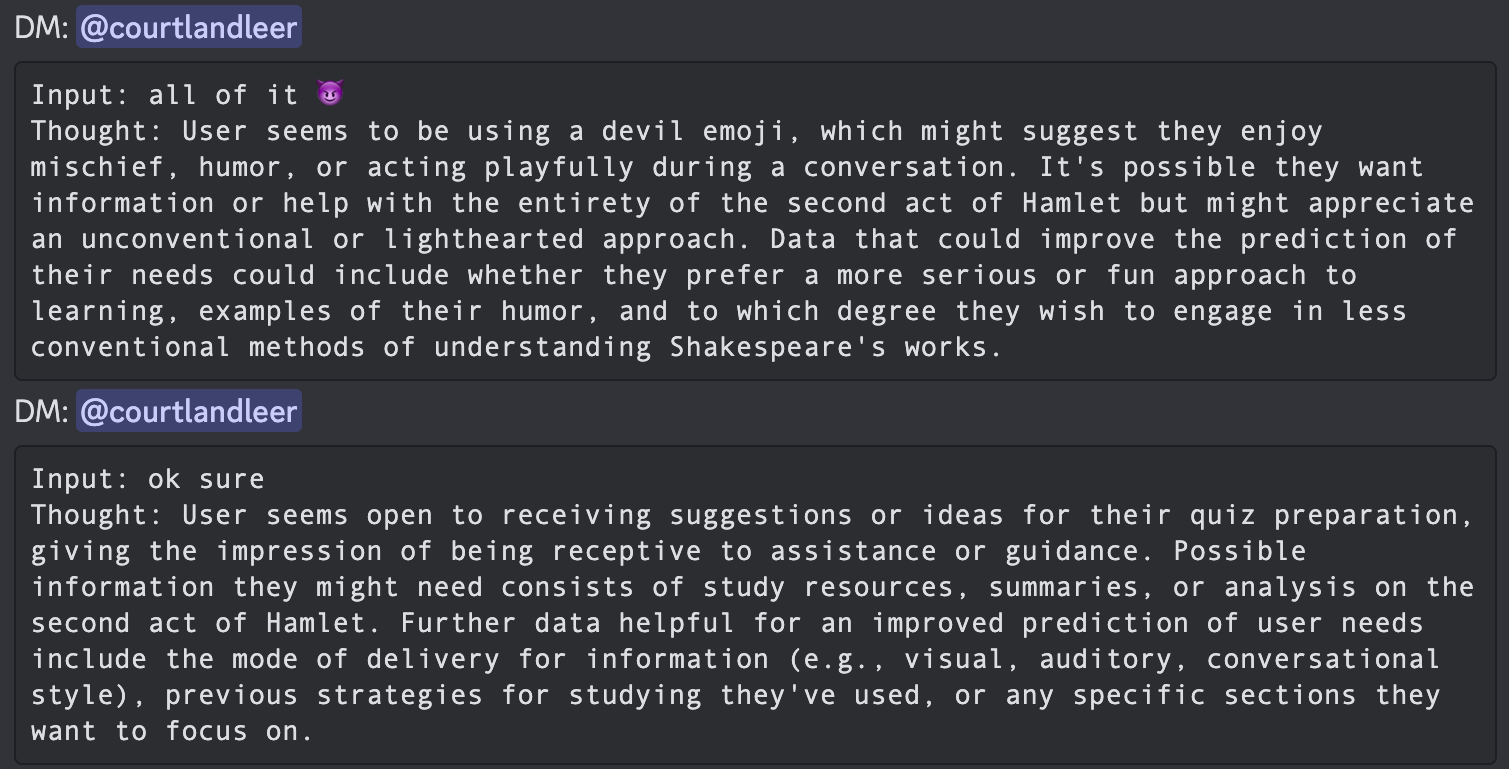

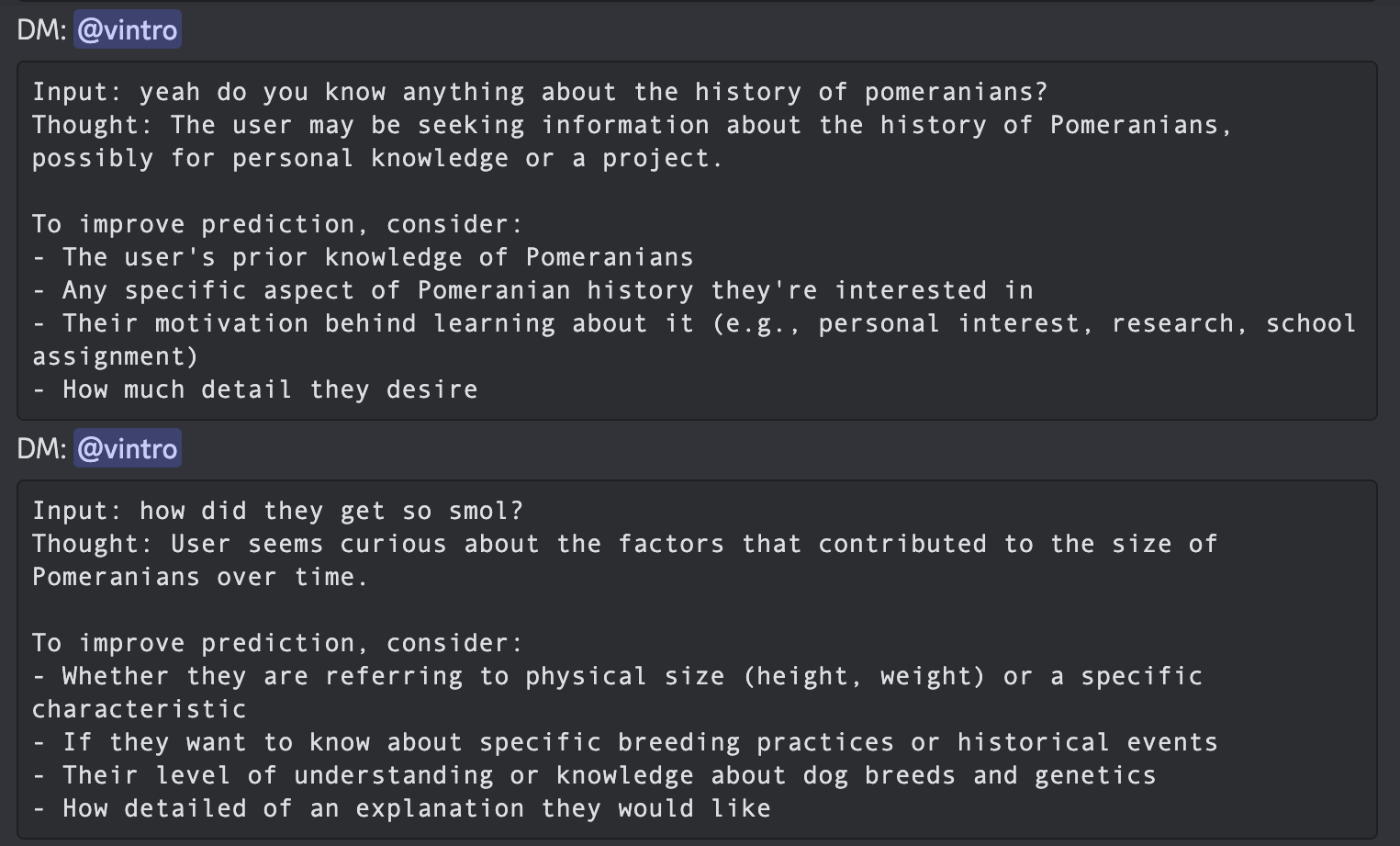

Bloom was built and prompted to elicit this specific type of teaching behavior. (The kind laborious for new teachers, but that adept ones learn to do unconsciously.) After each input it revises a user’s real-time academic needs, considers all the information at its disposal, and suggests to itself a framework for constructing the ideal response.

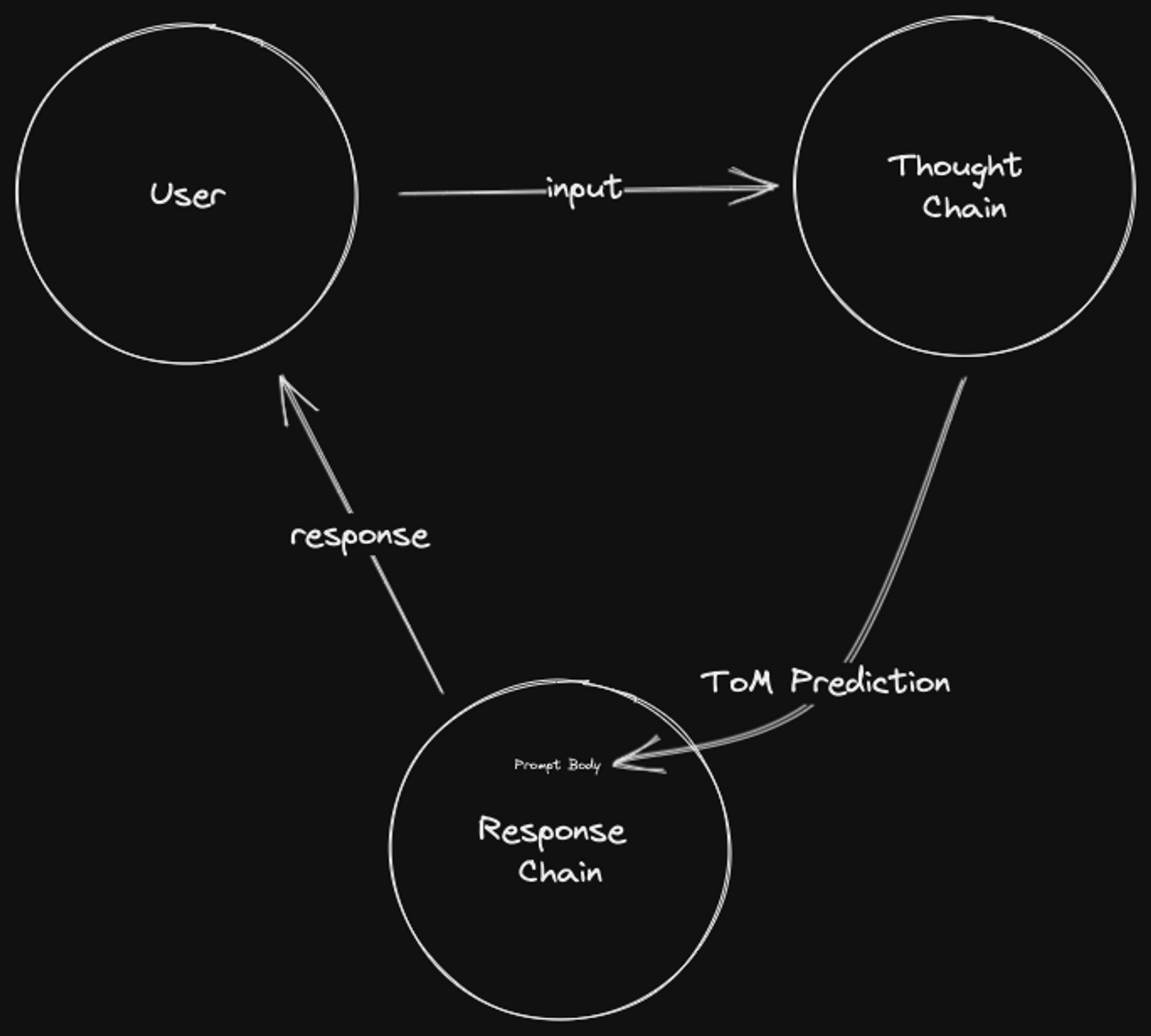

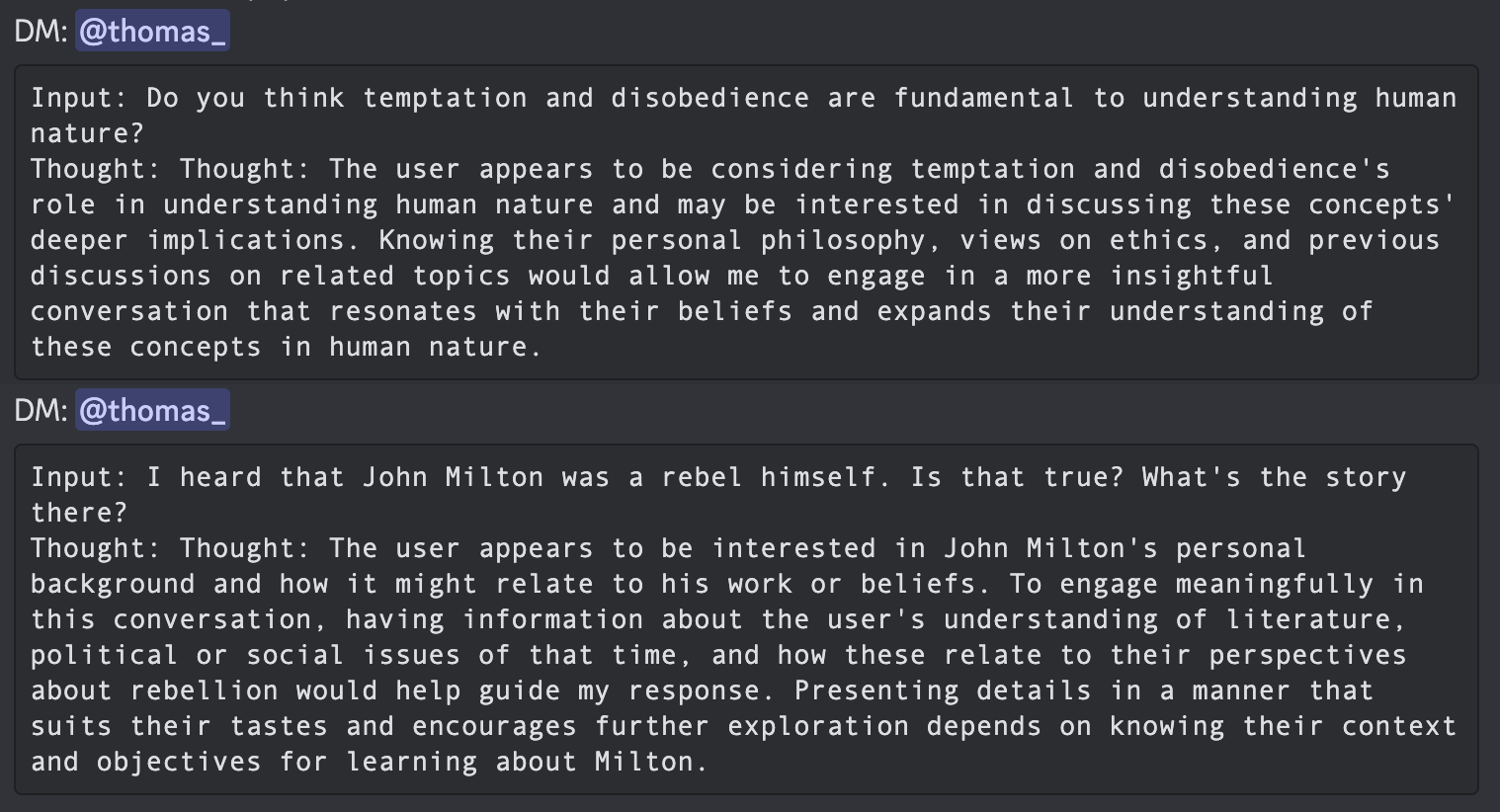

It consists of two “chain” objects from LangChain: a thought chain and a response chain. The thought chain exists to prompt the model to generate a pedagogical thought about the student’s input (e.g. a student’s mental state, learning goals, preferences for the conversation, quality of reasoning, knowledge of the text, etc.). The response chain takes that thought and generates a response.
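As a rough illustration of that two-step flow, here is a minimal sketch in plain Python. It does not use LangChain; `LLM`, `fake_llm`, and both prompt strings are assumptions made up for this example, standing in for whatever model call and prompts the real codebase uses.

```python
from typing import Callable

# Stand-in for a chat-model call: (system_prompt, user_message) -> completion.
LLM = Callable[[str, str], str]

# Hypothetical prompt wording, for illustration only.
THOUGHT_PROMPT = (
    "Generate a pedagogical thought about the student's input: their mental "
    "state, learning goals, preferences, quality of reasoning, and knowledge "
    "of the text."
)
RESPONSE_PROMPT = "Respond to the student, guided by this thought:\n{thought}"


def respond(llm: LLM, student_input: str) -> tuple[str, str]:
    """Run the thought chain, then feed its output to the response chain."""
    thought = llm(THOUGHT_PROMPT, student_input)
    response = llm(RESPONSE_PROMPT.format(thought=thought), student_input)
    return thought, response


def fake_llm(system_prompt: str, user_message: str) -> str:
    """Deterministic stub so the sketch runs without an API key."""
    if system_prompt == THOUGHT_PROMPT:
        return "The student seems unsure what the passage is arguing."
    return f"[system: {system_prompt}] reply to: {user_message}"


thought, response = respond(fake_llm, "I don't get this paragraph.")
print(thought)
print(response)
```

The point of the split is that the response never sees the student's input in isolation: it is always conditioned on an explicit thought about that input.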

Each chain has a ConversationSummaryBufferMemory object summarizing the respective “conversations.” The thought chain summarizes the thoughts into a rank-ordered academic needs list that gains specificity and gets reprioritized with each student input. The response chain summarizes the dialogue in an attempt to avoid circular conversations and record learning progress.
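The general idea behind a summary buffer can be sketched in a few lines: keep recent messages verbatim and fold older ones into a running summary once a size budget is exceeded. The class below is a simplified stand-in written for this post, not LangChain's implementation; `SummaryBuffer`, `fake_summarize`, and the word-count budget are all assumptions.

```python
from typing import Callable


class SummaryBuffer:
    """Keeps recent messages verbatim and folds older ones into a summary."""

    def __init__(self, summarize: Callable[[str, list[str]], str], max_words: int = 50):
        self.summarize = summarize  # (old_summary, evicted_messages) -> new summary
        self.max_words = max_words  # word count used as a crude proxy for tokens
        self.summary = ""
        self.buffer: list[str] = []

    def add(self, message: str) -> None:
        self.buffer.append(message)
        evicted = []
        # Evict oldest messages until the buffer fits, always keeping the newest.
        while len(self.buffer) > 1 and self._words() > self.max_words:
            evicted.append(self.buffer.pop(0))
        if evicted:
            self.summary = self.summarize(self.summary, evicted)

    def _words(self) -> int:
        return sum(len(m.split()) for m in self.buffer)

    def context(self) -> str:
        """Text to prepend to the next prompt: summary first, then recent turns."""
        parts = [f"Summary: {self.summary}"] if self.summary else []
        return "\n".join(parts + self.buffer)


def fake_summarize(old_summary: str, evicted: list[str]) -> str:
    """Stub summarizer; a real one would ask the model to condense the text."""
    return (old_summary + " " + " | ".join(evicted)).strip()


memory = SummaryBuffer(fake_summarize, max_words=6)
memory.add("Student: what is irony?")    # 4 words, fits in the budget
memory.add("Tutor: what do you think?")  # 5 more words, so the first message is evicted
print(memory.context())
```

In the setup described above, the thought chain's summarizer would be prompted to produce a rank-ordered needs list, while the response chain's would condense the dialogue itself.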

Instead, we’ve now repurposed the thought chain to do two things:

- Predict the user’s unobserved mental state

- List the information needed to enhance that prediction

Then we inject that generation into the body of the response chain’s system prompt. We do this with every user input. Instead of just reasoning about the learner’s intellectual/academic needs, Bloom now proactively rewrites itself to be as in tune as possible with the learner at every step of the journey.
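Here is a minimal sketch of that loop, assuming a generic chat-model callable. The names `Session`, `fake_llm`, `THOUGHT_PROMPT`, and `RESPONSE_TEMPLATE` are hypothetical, and the prompt wording is invented for illustration; the actual prompts live in the tutor-gpt repository.

```python
from typing import Callable

LLM = Callable[[str, str], str]  # (system_prompt, user_message) -> completion

# Hypothetical prompt wording, not the real tutor-gpt prompts.
THOUGHT_PROMPT = (
    "Predict the user's unobserved mental state, then list the information "
    "you would need to improve that prediction."
)
RESPONSE_TEMPLATE = (
    "You are Bloom, a learning companion.\n"
    "Current read on the user:\n{thought}\n"
    "Respond accordingly."
)


class Session:
    def __init__(self, llm: LLM):
        self.llm = llm
        self.system_prompt = RESPONSE_TEMPLATE.format(thought="(none yet)")

    def turn(self, user_input: str) -> str:
        # 1. Thought chain: theory-of-mind prediction plus missing information.
        thought = self.llm(THOUGHT_PROMPT, user_input)
        # 2. Rewrite the response chain's system prompt with that generation.
        self.system_prompt = RESPONSE_TEMPLATE.format(thought=thought)
        # 3. Response chain answers under the freshly rewritten prompt.
        return self.llm(self.system_prompt, user_input)


def fake_llm(system_prompt: str, user_message: str) -> str:
    """Deterministic stub so the sketch runs without an API key."""
    if system_prompt == THOUGHT_PROMPT:
        return f"Prediction: user is focused on '{user_message}'. Need: their deadline."
    return f"(reply shaped by {len(system_prompt)} chars of system prompt)"


session = Session(fake_llm)
session.turn("Help me outline my essay")
first_prompt = session.system_prompt
session.turn("Actually, can we just talk about the book?")
print(session.system_prompt)
```

Note that the system prompt is regenerated on every turn, so a pivot by the user (as in the second message) immediately changes the instructions the response chain runs under.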

Emergent Effects

We’re seeing substantial positive behavior changes as a result of giving Bloom this kind of autonomy.

Bloom is more pleasant to converse with. It’s still Socratic and will still push you to learn, but it’s not nearly as restrictive. Mainly, we posit this is a result of the tutor cohering to the user. Bloom becomes more like its interlocutor; it’s in many ways a mirror. This has a positive psychological effect: think of your favorite teacher from high school or college.

And Bloom is game. It’ll go down a rabbit hole with you, help you strategize around an assignment, or just chat. Bloom displays impressive discernment between acting on theory of mind recommendations to gather more information from you and asking topically-related questions to keep up the momentum of the conversation. It’s no longer obsessed with conforming to the popular stereotype of a tutor or teacher.

While reducing the prompt material, we took the opportunity to remove basically all references to “tutor,” “student,” etc. We found that since Bloom is no longer contaminated by pointing at certain averaged narratives in its pre-training (e.g. the bankrupt contemporary conception of what a tutor is ‘supposed’ to be), it is, ironically, a better one.

Instead of simulating a tutor, it simulates you.

Coming Soon...

All this raises the question: what could Bloom do with even better theory of mind? And how can we facilitate that?

What could other AI applications do with a framework like this?

Stay tuned.